Fine-tune FLUX.1

Fine-tuning is now available on Replicate for the FLUX.1 [dev] image model. Here’s what that means, and how to do it.

8/16/2024 · 8 min read

We have fine-tuning for FLUX.1

FLUX.1 is a family of text-to-image models released by Black Forest Labs this summer. The FLUX.1 models set a new standard for open-weights image models: they can generate realistic hands, legible text, and even the strangely hard task of “funny memes.” You can now fine-tune FLUX.1 [dev] on Replicate with the FLUX.1 Dev LoRA Trainer.

Fine-Tuning FLUX.1 on Replicate

What does it mean to fine-tune FLUX.1 on Replicate? Large image generation models like FLUX.1 and Stable Diffusion are trained on vast datasets of images with added noise. These models learn to reverse the noising process, which, remarkably, turns out to be a way to create images.

But how do these models know what image to generate? They rely on text encoders such as CLIP and T5. CLIP is trained on an enormous number of image-caption pairs and learns to map an image and its caption to nearby points in a shared high-dimensional space; T5 is a text-only transformer that encodes the meaning of a prompt in more detail. Given a prompt such as "squirrel reading a newspaper in the park," these encoders turn it into a numerical representation that steers the image model toward a matching pattern of pixels. To CLIP, an image and its caption end up as essentially the same point.
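To make the idea of a shared embedding space concrete, the sketch below scores captions against an image with cosine similarity, the measure CLIP uses to compare embeddings. The four-dimensional vectors are made up for illustration; a real encoder would produce them, with many more dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up embeddings for illustration only; real CLIP vectors are far larger.
image_embedding = [0.9, 0.1, 0.3, 0.0]
caption_embeddings = {
    "squirrel reading a newspaper in the park": [0.8, 0.2, 0.4, 0.1],
    "a bowl of soup on a kitchen table": [0.0, 0.9, 0.1, 0.7],
}

# Pick the caption whose embedding points in the most similar direction.
best_caption = max(
    caption_embeddings,
    key=lambda c: cosine_similarity(image_embedding, caption_embeddings[c]),
)
print(best_caption)
```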

The image generation process starts from random noise and, step by step, moves the pixels away from noise and toward the pattern specified by the text input, repeating until the desired image is formed.
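The loop below is a toy illustration of that idea, loosely modeled on the flow-matching approach behind models like FLUX.1. It is not how FLUX.1 is implemented: here an oracle supplies the exact direction from the starting noise to a made-up four-pixel target, whereas the real model predicts that direction at every step from the current image and the text embedding.

```python
import random


def generate_toy(target: list[float], num_steps: int, seed: int = 0) -> list[float]:
    """Toy generator: start from random noise and take small Euler steps toward target.

    An oracle provides the velocity (target - noise); a real model would have to
    predict it from the noisy image and the prompt embedding.
    """
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in target]
    x = list(noise)
    dt = 1.0 / num_steps
    for _ in range(num_steps):
        # Constant velocity along the straight path from noise (t=0) to image (t=1).
        velocity = [t - n for t, n in zip(target, noise)]
        x = [xi + dt * v for xi, v in zip(x, velocity)]
    return x


target_pixels = [0.2, 0.8, 0.5, 0.1]  # made-up "image" of four pixel values
result = generate_toy(target_pixels, num_steps=28)
print([round(p, 3) for p in result])
```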

Fine-tuning updates the model's internal mapping using each image-caption pair from your dataset. This process allows you to teach the model anything that can be represented through image-caption pairs, such as characters, settings, mediums, styles, or genres. During training, the model learns to associate your concept with a particular text string, which can then be used in a prompt to activate that association.
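The FLUX.1 Dev LoRA Trainer does this with LoRA (low-rank adaptation): rather than rewriting a layer's full weight matrix W, it learns two small matrices whose product is added to W. The sketch below shows only that arithmetic, W + (alpha / rank) · (up × down), on a made-up 3×3 layer; the sizes, `alpha`, and `rank` are illustrative, not the trainer's settings.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [
        [sum(a[i][p] * b[p][j] for p in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def apply_lora(
    w: list[list[float]],
    down: list[list[float]],
    up: list[list[float]],
    alpha: float,
    rank: int,
) -> list[list[float]]:
    """Return W + (alpha / rank) * (up @ down) as a new matrix; W itself stays frozen."""
    delta = matmul(up, down)  # (d_out x rank) @ (rank x d_in) -> (d_out x d_in)
    scale = alpha / rank
    return [
        [w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
        for i in range(len(w))
    ]


# Frozen 3x3 base weight plus a rank-1 adapter: 3 + 3 = 6 trainable numbers
# instead of 9. The savings grow with layer size.
w = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
up = [[1.0], [0.0], [2.0]]    # d_out x rank
down = [[0.5, 0.5, 0.0]]      # rank x d_in
w_adapted = apply_lora(w, down, up, alpha=1.0, rank=1)
print(w_adapted)
```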

For example, if you want to fine-tune the model to recognize your comic book superhero, you'll gather a dataset of images featuring your character in various settings, costumes, lighting, and art styles. This diverse collection helps the model understand that it's learning about a specific character rather than any incidental details.

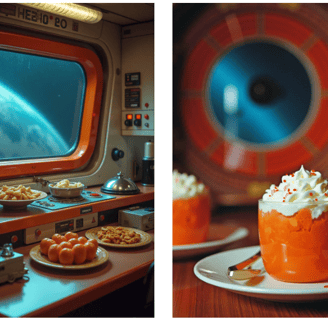

Next, choose a unique trigger word or phrase that won't conflict with other concepts or fine-tunes. Something distinct like "bad 70s food" or "JELLOMOLD" works well. After training, when you prompt "Establishing shot of bad 70s food at a party in San Francisco," your model will recall your specific concept. It really can be that simple.
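If you plan to use several fine-tunes together, a quick check can catch trigger words that overlap. A minimal sketch: `trigger_conflicts` is a hypothetical helper, and substring overlap is only a rough proxy for how the model will actually respond to similar strings.

```python
def trigger_conflicts(candidate: str, taken: list[str]) -> list[str]:
    """Return entries in `taken` that overlap with `candidate` (case-insensitive).

    Two strings overlap if either one contains the other, e.g. "TOK" and "TOKYO".
    """
    c = candidate.lower()
    return [t for t in taken if c in t.lower() or t.lower() in c]


print(trigger_conflicts("JELLOMOLD", ["TOK", "CYBRPNK", "bad 70s food"]))
```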

On Replicate, fine-tuning is as easy as uploading images, and the trainer can even caption them for you.

If you're not familiar with Replicate, it lets you run AI models through an API. You don't need to hunt for powerful GPUs, manage environments and containers, or worry about scaling. You write straightforward code with standard APIs and pay only for what you use.

You can try this out right now! It doesn’t require a large number of images. Explore our examples gallery to see the styles and characters others have created. Grab a few photos of your pet or favorite houseplant, and let’s get started.

How to Fine-Tune FLUX.1

Fine-tuning FLUX.1 on Replicate is a straightforward process that can be done either through the web interface or programmatically via the API. Here’s how to go about it:

Step 1: Prepare Your Training Data

Start by gathering a collection of images that represent the concept you want to teach the model. These images should be diverse enough to cover different aspects of the concept. For example, if you’re fine-tuning on a specific character, include images in various settings, poses, and lighting. Here are some guidelines to follow:

- Number of Images: Use 12-20 images for optimal results.

- Image Size: Use large images if possible for better quality.

- Formats: Use JPEG or PNG formats.

- Captions (Optional): For each image, you can add a `.txt` file with the same base name that contains its caption (for example, `photo1.jpg` and `photo1.txt`).

Once your images (and optional captions) are ready, zip them into a single file.
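One way to build that zip file is with Python's standard `zipfile` module. A minimal sketch, assuming your images and optional captions sit together in one folder; `zip_training_data` and the folder names are hypothetical.

```python
import zipfile
from pathlib import Path

IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def zip_training_data(image_dir: str, zip_path: str) -> list[str]:
    """Zip every JPEG/PNG in image_dir plus any same-named .txt caption files.

    Returns the file names written to the archive, in the order they were added.
    """
    folder = Path(image_dir)
    written = []
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for image in sorted(folder.iterdir()):
            if image.suffix.lower() not in IMAGE_EXTENSIONS:
                continue  # skips captions here (added below) and unrelated files
            zf.write(image, arcname=image.name)
            written.append(image.name)
            caption = image.with_suffix(".txt")  # e.g. photo1.jpg -> photo1.txt
            if caption.exists():
                zf.write(caption, arcname=caption.name)
                written.append(caption.name)
    return written
```

For example, `zip_training_data("training-images", "training-images.zip")` would write `training-images.zip` and return the names it added, which makes it easy to spot images whose captions are missing.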

Step 2: Start the Training Process

Using the Web Interface:

1. Access the Trainer:

- Navigate to the FLUX.1 Dev LoRA Trainer on Replicate.

2. Model Selection:

- Select an existing model as your destination or create a new one by typing the name in the model selector field.

3. Upload Training Data:

- Upload the zip file containing your training data as the `input_images`.

4. Set Up Training Parameters:

- Trigger Word: Choose a string that isn't a real word, such as "TOK", or one related to the concept, such as "CYBRPNK." This trigger word will be associated with all images during training and can be used in prompts to activate your concept in the fine-tuned model.

- Steps: Start with 1000 steps for a balanced training process.

- Other Parameters: Leave the `learning_rate`, `batch_size`, and `resolution` at their default values.

- Autocaptioning: Leave this enabled unless you prefer to provide your own captions.

5. Save Model (Optional):

- If you want to save your model on Hugging Face, enter your Hugging Face token and set the Repo ID.

6. Begin Training:

- Once you’ve filled out the form, click `Create training` to start the fine-tuning process.

Once the training is complete, your fine-tuned FLUX.1 model will be ready to use.
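If you later want to script the same training, the form fields map onto the trainer's `input` dictionary. The helper below is a hypothetical sketch: `input_images` and `steps` appear in this guide, but `trigger_word` and `autocaption` are assumed parameter names for the Trigger Word and Autocaptioning fields, so confirm them on the trainer's API tab before relying on them.

```python
def build_training_input(
    zip_file, trigger_word: str, steps: int = 1000, autocaption: bool = True
) -> dict:
    """Collect the web form's fields into an `input` dict for the trainer.

    `trigger_word` and `autocaption` are ASSUMED parameter names. Fields left out
    (learning_rate, batch_size, resolution) keep the trainer's defaults.
    """
    if not trigger_word.strip():
        raise ValueError("trigger_word must be a non-empty string")
    return {
        "input_images": zip_file,  # an open binary file, as in the API example
        "trigger_word": trigger_word,
        "steps": steps,
        "autocaption": autocaption,
    }
```

Leaving `learning_rate`, `batch_size`, and `resolution` out of the dictionary mirrors the advice to keep them at their defaults.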

How to Fine-Tune FLUX.1 Using the API

Fine-tuning FLUX.1 through the API on Replicate takes only a few steps: set up your environment, create a destination model, and start the training. Here’s how to do it:

Step 1: Set Up Your Environment

First, ensure that your `REPLICATE_API_TOKEN` is set in your environment. You can find this token in your Replicate account settings.

```bash
export REPLICATE_API_TOKEN=r8_***************************
```
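Before calling the API from Python, it can help to fail early if the token is missing. A minimal sketch: `get_replicate_token` is a hypothetical helper, and the Replicate Python client reads `REPLICATE_API_TOKEN` from the environment on its own without it.

```python
import os


def get_replicate_token() -> str:
    """Return REPLICATE_API_TOKEN from the environment, or fail with a clear message."""
    token = os.environ.get("REPLICATE_API_TOKEN", "").strip()
    if not token:
        raise RuntimeError(
            "REPLICATE_API_TOKEN is not set; export it before calling the Replicate API."
        )
    return token
```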

Step 2: Create a New Model

Next, create a new model that will serve as the destination for your fine-tuned weights. This is where your trained model will be stored once the fine-tuning process is complete.

```python
import replicate

# Create a new model
model = replicate.models.create(
    owner="yourusername",
    name="flux-your-model-name",
    visibility="public",  # or "private" if you prefer
    hardware="gpu-t4",  # Replicate will override this for fine-tuned models
    description="A fine-tuned FLUX.1 model"
)

print(f"Model created: {model.name}")
print(f"Model URL: https://replicate.com/{model.owner}/{model.name}")
```

This code creates a new model on Replicate. You can choose to make the model public or private based on your preferences.

Step 3: Start the Training Process

With your model set up, you can now start the training process by creating a new training run. You’ll need to provide the input images, the number of training steps, and any other desired parameters.

```python
# Start the training process
training = replicate.trainings.create(
    version="ostris/flux-dev-lora-trainer:4ffd32160efd92e956d39c5338a9b8fbafca58e03f791f6d8011f3e20e8ea6fa",
    input={
        "input_images": open("/path/to/your/local/training-images.zip", "rb"),
        "steps": 1000,
        "hf_token": "YOUR_HUGGING_FACE_TOKEN",  # optional
        "hf_repo_id": "YOUR_HUGGING_FACE_REPO_ID",  # optional
    },
    destination=f"{model.owner}/{model.name}"
)

print(f"Training started: {training.status}")
print(f"Training URL: https://replicate.com/p/{training.id}")
```

- `version`: This specifies the FLUX.1 Dev LoRA Trainer version you’re using for the fine-tuning process.

- `input_images`: Your zipped training images, passed as a file opened in binary mode (`"rb"`).

- `steps`: Set the number of training steps (1000 is a good starting point).

- `hf_token` and `hf_repo_id`: If you want to save your model on Hugging Face, include these optional parameters.

Once you run this code, your training process will start, and you’ll receive a URL where you can track the progress.

Step 4: Monitor Training Progress

Training typically takes 20-30 minutes and costs under $2. During this time, you can monitor the status and progress of your training run via the provided URL.

Note: The hardware you pick initially doesn't matter because Replicate automatically routes FLUX.1 fine-tunes to H100s, ensuring efficient and fast training.
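If you would rather watch from a script than the web page, you can poll the training's status. A minimal sketch, assuming the status strings `starting`, `processing`, `succeeded`, `failed`, and `canceled` used by Replicate's API; `wait_for_training` is a hypothetical helper that takes a status-reading function, so it can be tried without network access.

```python
import time
from typing import Callable

TERMINAL_STATUSES = {"succeeded", "failed", "canceled"}


def wait_for_training(
    get_status: Callable[[], str],
    poll_seconds: float = 30.0,
    timeout_seconds: float = 3600.0,
) -> str:
    """Call get_status() until it returns a terminal status, then return that status."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        status = get_status()
        print(f"Training status: {status}")
        if status in TERMINAL_STATUSES:
            return status
        if time.monotonic() >= deadline:
            raise TimeoutError(f"Training still '{status}' after {timeout_seconds} s")
        time.sleep(poll_seconds)
```

With the Replicate Python client, you might pass `lambda: replicate.trainings.get(training.id).status`, where `training` is the object returned by `replicate.trainings.create`. A 30-second interval is plenty for a run that takes 20-30 minutes.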

Using Your Trained Model

Once your FLUX.1 model has been fine-tuned and training is complete, you can easily use it on Replicate just like any other model. Here’s how:

Using the Web Interface

1. Navigate to Your Model Page:

- Go to your model’s page on Replicate. For example, if your username is `yourusername` and your model is named `flux-your-model-name`, visit: `https://replicate.com/yourusername/flux-your-model-name`.

2. Input Your Prompt:

- In the prompt input field, include the trigger word you used during fine-tuning (e.g., “bad 70s food”). This will activate the fine-tuned concept.

3. Adjust Inputs:

- Modify any other input settings as needed, such as image size, resolution, or additional prompt details.

4. Generate Your Image:

- Click the “Run” button to generate an image using your fine-tuned model.

Your model will then process the request and produce an image based on the prompt and fine-tuning you provided.

Generating Images with Your Trained Model via the API

Once you've fine-tuned your FLUX.1 model, you can generate images programmatically using the Replicate Python client. Here's how to do it:

Step 1: Generate Images with Your Model

Use the following Python code to generate images with your fine-tuned model:

```python
import replicate

output = replicate.run(
    "yourusername/flux-your-model-name:version_id",
    input={
        "prompt": "A portrait photo of a space station, bad 70s food",
        "num_inference_steps": 28,
        "guidance_scale": 7.5,
        "model": "dev",  # Use "schnell" for faster inference
    }
)

print(f"Generated image URL: {output}")
```

- `yourusername/flux-your-model-name:version_id`: Replace this with your actual model details, which you can find on your model's page under the "API" tab.

- `prompt`: The text prompt that includes your trigger word to activate the fine-tuned concept.

- `num_inference_steps`: Controls the number of steps for generating the image. Higher numbers typically result in more detailed images.

- `guidance_scale`: Controls how closely the model should follow the prompt. A typical value is around 7.5.

- `model`: Set this to `"dev"` for the default FLUX.1 Dev model or `"schnell"` for faster, less resource-intensive inference.
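Because the fine-tuned concept only activates when the trigger word appears in the prompt, a small guard can add it when you forget. A minimal sketch: `with_trigger` is a hypothetical helper, and it matches the trigger word exactly (case-sensitive) since the text encoders may treat "TOK" and "tok" differently.

```python
def with_trigger(prompt: str, trigger_word: str) -> str:
    """Return prompt unchanged if it contains trigger_word, else prefix the trigger."""
    if trigger_word in prompt:
        return prompt
    return f"{trigger_word}, {prompt}"


print(with_trigger("a boat at sunset", "TOK"))
```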

Step 2: Using FLUX.1 Schnell for Faster Inference

If you want to generate images faster and at a lower cost, you can use the smaller FLUX.1 Schnell model. Here’s how to do it:

- Change the `model` parameter from `"dev"` to `"schnell"`.

- Reduce the number of inference steps to a smaller value, such as 4, for quicker results.

Note: Even when using FLUX.1 Schnell, the outputs will still be subject to the non-commercial license of FLUX.1 Dev.

This approach allows you to efficiently generate images using your fine-tuned model with flexibility in performance and cost.
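Switching between the two models amounts to changing two inputs. A minimal sketch: `flux_inputs` is a hypothetical helper that reuses the Dev values from the example above and applies the Schnell settings described in this step.

```python
def flux_inputs(prompt: str, fast: bool = False) -> dict:
    """Build the `input` dict for a fine-tuned FLUX.1 model on Replicate.

    fast=False: FLUX.1 Dev with 28 inference steps.
    fast=True:  FLUX.1 Schnell with 4 steps, trading detail for speed and cost.
    """
    return {
        "prompt": prompt,
        "model": "schnell" if fast else "dev",
        "num_inference_steps": 4 if fast else 28,
        "guidance_scale": 7.5,  # same value as the Dev example
    }
```

You could then call `replicate.run("yourusername/flux-your-model-name:version_id", input=flux_inputs("a boat in the style of TOK", fast=True))`.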

Costs

Fine-tuned FLUX.1 models on Replicate are charged per second, for both fine-tuning and generating images. The total time a fine-tuning run will take varies based on the number of steps you train.

Check Replicate's pricing page for more details.
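Because billing is per second, a cost estimate is just the run's duration multiplied by the per-second price of the hardware it runs on. A minimal sketch; the rate below is a made-up placeholder, not Replicate's actual price.

```python
def estimate_cost(duration_seconds: float, price_per_second: float) -> float:
    """Per-second billing: cost = billed seconds x price per second."""
    if duration_seconds < 0 or price_per_second < 0:
        raise ValueError("duration and price must be non-negative")
    return duration_seconds * price_per_second


# HYPOTHETICAL rate; look up the real per-second price on the pricing page.
HYPOTHETICAL_PRICE_PER_SECOND = 0.001
# A 25-minute run, within the 20-30 minutes mentioned for a typical fine-tune.
print(f"${estimate_cost(25 * 60, HYPOTHETICAL_PRICE_PER_SECOND):.2f}")
```

Since run time grows with the number of training steps, doubling `steps` will roughly double the estimate.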

Examples and use cases

Check out the examples gallery for inspiration. You can see how others have fine-tuned FLUX.1 to create different styles, characters, a never-ending parade of cute animals, and more.

Conclusion

Fine-tuning FLUX.1 on Replicate offers a powerful and accessible way to craft specialized image generation models tailored to your creative needs. Whether you're an AI engineer pushing the boundaries of what's possible or a multimedia artist seeking to create unique works, this process unlocks a world of new possibilities.

Stay tuned for future updates and features as we continue to enhance the fine-tuning experience. Give the FLUX.1 Dev LoRA Trainer a try, and we can't wait to see what you create!

Happy training!

Example Prompts and Results

Prompt:

"A photo, editorial avant-garde dramatic action pose of a white person wearing 60s round wacky sunglasses with gemstones hanging pulling glasses down looking forward, in Italy at sunset with a vibrant illustrated jacket surrounded by illustrations of flowers, smoke, flames, ice cream, sparkles, rock and roll"

Prompt:

"a boat in the style of TOK"

We are a non-profit community of AI enthusiasts and researchers dedicated to exploring and advancing the latest in generative AI technology. We are not affiliated with BlackForestLabs.ai